Why Google Built TPUs and Why Investors Should Care

Inference costs, custom silicon, and what it signals for NVIDIA, Nebius, and AI investors

Disclaimer: This publication and its authors are not licensed investment professionals. Nothing posted on this blog should be construed as investment advice. Do your own research.

There’s currently a lot of buzz around Google’s TPU, especially among investors and here on Substack. Given that, and given your interest in my last post about AI hardware, I think it’s a good idea to use today’s post to explain what TPUs are, where they came from, and how they influence AI.

A short history of TPUs: why they were built

To understand why Tensor Processing Units (TPUs) matter for AI infrastructure investing, it helps to start with the problem they were designed to solve.

By the early 2010s, Google was already running neural networks in production across search ranking, ads, speech recognition, and image classification. These models worked, but the cost of running them at scale was becoming a structural issue. CPUs were too slow and inefficient. GPUs helped, but they were designed for graphics first and carried a lot of flexibility that translated into wasted power and higher operating costs when used continuously for inference.

This is the context in which TPUs emerged.

Training vs inference: What you need to know to understand TPUs

Before talking about TPUs in detail, it is worth slowing down on a distinction that shapes almost every AI cost curve: training versus inference.

Training is the phase where a model learns. It is compute-intensive, episodic, and highly visible. Large clusters spin up, capital is deployed in bursts, and progress is easy to market. This is the phase that produces headlines, benchmark charts, and capex shock numbers. From the outside, it looks like the center of gravity in AI.

A concrete example is large language models like OpenAI’s GPT series. Training a new generation involves running massive datasets through thousands of GPUs or accelerators for weeks or months. This is where you see headline numbers around capex, energy usage, and hardware shortages. Once the run is finished, the cluster can be reallocated or powered down.

Another example is recommendation models at companies like Netflix or Meta. These models are retrained periodically as user behavior shifts. The training jobs are large but scheduled. They are part of an operational cycle, not a constant drain.

Inference is what happens after the model exists. Every prompt, every search ranking, every recommendation, every generated token runs through inference. It is continuous, predictable, and quietly expensive. Inference does not happen once per quarter. It happens millions or billions of times per day, and it shows up directly in cost of revenue.

Every time someone types a query into a search engine at Google, multiple models run inference to rank results, filter spam, translate languages, and personalize outcomes. Each individual query is cheap. Billions of them are not.

When a user asks a question in a chatbot powered by models from OpenAI, inference runs for every token generated. The longer the answer, the higher the cost. This happens continuously, regardless of whether a new model is being trained that week.

In e-commerce, companies like Amazon run inference constantly to power recommendations, search ranking, fraud detection, and pricing systems. These models may be retrained occasionally, but inference never stops. It is part of the baseline cost of doing business.

At small scale, this distinction barely matters. Training dominates costs, inference is negligible, and general-purpose hardware feels flexible enough. At large scale, the balance flips. Training becomes a smaller share of lifetime cost, while inference becomes the permanent load the business has to carry.
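To make the flip concrete, here is a minimal back-of-the-envelope sketch. Every number in it (the training cost, the cost per request, the request volumes) is a hypothetical assumption chosen for illustration, not a figure from Google or any other company.

```python
def lifetime_costs(training_cost, cost_per_request, requests_per_day, days):
    """Return (training, inference, inference_share) for one model's deployed life."""
    inference_cost = cost_per_request * requests_per_day * days
    total = training_cost + inference_cost
    return training_cost, inference_cost, inference_cost / total

# Hypothetical inputs: a $50M training run and $0.002 of compute per request.
TRAINING_COST = 50_000_000
COST_PER_REQUEST = 0.002

# Same model, same unit cost, two very different request volumes over one year.
small = lifetime_costs(TRAINING_COST, COST_PER_REQUEST, requests_per_day=100_000, days=365)
large = lifetime_costs(TRAINING_COST, COST_PER_REQUEST, requests_per_day=1_000_000_000, days=365)

print(f"small scale: inference is {small[2]:.1%} of lifetime cost")
print(f"large scale: inference is {large[2]:.1%} of lifetime cost")
```

Under these assumed inputs, the training bill is identical in both cases. Only the request volume changes, and that alone is enough to move inference from a rounding error to the bulk of lifetime cost.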

This is the point where incentives change, and it is why TPUs were invented.

What a TPU actually is

A Tensor Processing Unit (TPU) is a custom-built application-specific integrated circuit (ASIC) designed specifically to accelerate machine learning workloads, especially dense tensor operations such as matrix multiplication. Unlike CPUs, which are general-purpose processors, or GPUs, which are broad parallel accelerators, TPUs are intentionally narrow. They remove features that are not useful for neural networks in order to maximize efficiency per watt and per dollar.
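For readers who have not seen one, this is what a dense matrix multiplication looks like when written out by hand. It is a plain-Python illustration of the arithmetic pattern, not a description of how a TPU is programmed; the `matmul` helper and the toy numbers are my own assumptions for the example.

```python
def matmul(a, b):
    """Multiply matrix a (m x k) by matrix b (k x n), counting multiply-accumulates."""
    m, k, n = len(a), len(b), len(b[0])
    out = [[0.0] * n for _ in range(m)]
    macs = 0
    for i in range(m):
        for j in range(n):
            for p in range(k):
                # One multiply-accumulate: the basic unit of work in a neural network layer.
                out[i][j] += a[i][p] * b[p][j]
                macs += 1
    return out, macs

# A toy "layer": 2 inputs with 3 features each, projected onto 2 outputs.
inputs = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

outputs, macs = matmul(inputs, weights)
print(outputs, macs)
```

The point of the sketch is the shape of the work: the same multiply-and-add step repeated m × k × n times with no branching. Real models run this pattern at vastly larger sizes, which is why a chip that does little else can afford to drop general-purpose features.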

Google’s own documentation describes them this way:

“Tensor Processing Units (TPUs) are Google’s custom-developed, application-specific integrated circuits (ASICs) used to accelerate machine learning workloads.”

Source: Google Cloud TPU documentation

https://docs.cloud.google.com/tpu/docs/intro-to-tpu

TPUs are not consumer chips. They are deployed inside Google data centers and offered through Google Cloud, typically in large groups called TPU pods, because their economic advantage only appears at scale.

Why Google built TPUs in the first place

Google began developing TPUs internally around 2013, long before AI became a mainstream investment theme. The motivation was not performance leadership for its own sake, but cost containment. Neural network inference was growing faster than hardware efficiency, and relying on general-purpose chips meant accepting a cost curve that would only get worse with scale.

An early Google engineering reflection makes this explicit:

“We thought we’d maybe build under 10,000 of them. We ended up building over 100,000 to support all kinds of great stuff including Ads, Search, speech projects, AlphaGo.”

Andy Swing, Principal Engineer, Google TPU team

Source: Google Cloud blog

https://cloud.google.com/transform/ai-specialized-chips-tpu-history-gen-ai

The first publicly disclosed TPU, announced in 2016, was built primarily for inference, not training. That detail is easy to overlook, but it matters. Inference is the always-on cost center. Training is episodic. Google was optimizing the part of the AI lifecycle that shows up every second in operating expenses.

What the efficiency gains looked like

In Google’s original academic paper introducing TPUs, the authors quantified just how inefficient general-purpose hardware had become for neural network workloads:

“The TPU delivered 15–30× higher performance and 30–80× higher performance-per-watt than contemporary CPUs and GPUs.”

Norman P. Jouppi et al., “In-Datacenter Performance Analysis of a Tensor Processing Unit”

Source: https://en.wikipedia.org/wiki/Tensor_Processing_Unit

Those numbers were not about peak benchmarks. They reflected real production inference workloads inside Google’s data centers.
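To translate a performance-per-watt ratio into money, a rough sketch helps. The baseline energy figure and the electricity price below are hypothetical, and the calculation makes the simplifying assumption that the quoted 30–80× range applies to the entire energy bill for a fixed workload, which a real data center (with cooling, networking, and memory overheads) would not match exactly.

```python
def annual_energy_cost(baseline_kwh, perf_per_watt_gain, price_per_kwh):
    """Energy cost for a fixed workload on hardware `perf_per_watt_gain` times more efficient."""
    return baseline_kwh / perf_per_watt_gain * price_per_kwh

BASELINE_KWH = 100_000_000  # hypothetical yearly energy use on general-purpose chips
PRICE_PER_KWH = 0.08        # hypothetical electricity price in USD

baseline = annual_energy_cost(BASELINE_KWH, 1, PRICE_PER_KWH)
low_end = annual_energy_cost(BASELINE_KWH, 30, PRICE_PER_KWH)   # 30x performance per watt
high_end = annual_energy_cost(BASELINE_KWH, 80, PRICE_PER_KWH)  # 80x performance per watt

print(f"baseline: ${baseline:,.0f}")
print(f"at 30x:   ${low_end:,.0f}")
print(f"at 80x:   ${high_end:,.0f}")
```

Even with generous error bars on the assumptions, a ratio of this size shifts the energy line by more than an order of magnitude, which is why it matters for an always-on workload.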

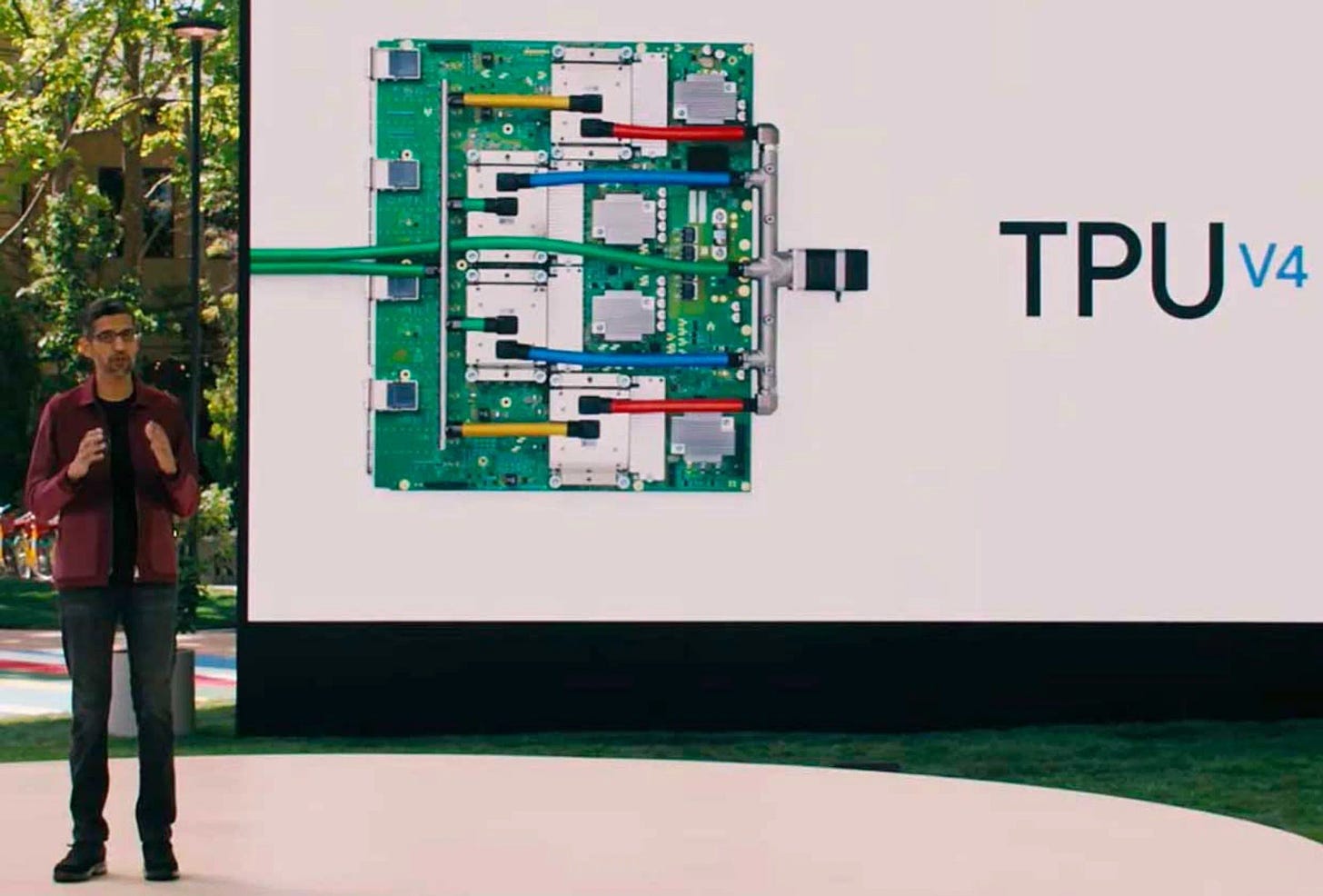

As TPUs evolved, later generations expanded into training and large-scale model deployment, but the design center remained the same: predictable performance, high utilization, and lower marginal cost at scale. The most recent generations, such as Google’s inference-focused TPU platforms, continue to emphasize efficiency rather than raw flexibility.

Industry analysts like UncoverAlpha on Substack have summarized this positioning succinctly:

“TPUs are not faster GPUs. They are cheaper answers to a narrower question.”

Source: Uncover Alpha

Why this history matters for investors

This history clarifies intent: TPUs were not a reaction to hype, nor an attempt to compete in the merchant chip market. They were a response to an internal cost curve that threatened long-term scalability.

From an investing perspective, that distinction is critical. When a company commits to custom silicon, it is usually because renting general-purpose infrastructure no longer works economically. TPUs are a visible example of how AI, once it reaches scale, stops behaving like software and starts behaving like infrastructure.

AI looks like software, but behaves like infrastructure

At small scale, AI behaves like software. You rent GPUs, ship features, and costs feel elastic. You can always optimize later. This phase is intoxicating, and it produces most of the narratives investors hear.

At scale, AI behaves like infrastructure. Power, memory bandwidth, utilization, and latency move from engineering trivia into margin drivers. Inference does not spike once a quarter like training runs. It runs every minute of every day, and it shows up directly in cost of revenue.

TPUs exist because this transition happens faster than people expect. Google did not build them to win benchmarks. It built them because renting general-purpose accelerators at hyperscale turns variable costs into a permanent tax.

Training gets the headlines, inference pays the bills

Training dominates public discussion because it is visible. Giant clusters, massive capex numbers, new model releases. Inference is quieter and far more important economically.

Once a model is deployed, inference becomes the long tail of cost. Every user query, every autocomplete, every recommendation request burns compute. Even modest inefficiencies compound brutally when multiplied by billions of requests.

TPUs are increasingly tuned for this reality. They are optimized around throughput per watt, stable latency, and predictable scaling rather than raw flexibility. That tells you something about where Google sees the long-term cost center.

For investors, the implication is simple. Any AI business that cannot structurally reduce inference cost over time is running uphill. Pricing pressure eventually arrives, and when it does, gross margin is the only shock absorber.
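A small sketch shows why. It tracks gross margin over a few years when prices fall at a fixed rate, once with flat unit costs and once with unit costs that fall slightly faster than prices. The starting price, starting cost, and decline rates are all hypothetical assumptions, not estimates for any real company.

```python
def gross_margin_path(price, cost, price_decline, cost_decline, years):
    """Yearly gross margin when price and unit cost each fall by a fixed annual rate."""
    margins = []
    for _ in range(years + 1):
        margins.append((price - cost) / price)
        price *= 1 - price_decline
        cost *= 1 - cost_decline
    return margins

# Hypothetical: $1.00 price and $0.40 inference cost per unit, prices falling 20% a year.
flat_cost = gross_margin_path(1.00, 0.40, price_decline=0.20, cost_decline=0.00, years=3)
falling_cost = gross_margin_path(1.00, 0.40, price_decline=0.20, cost_decline=0.25, years=3)

for year, (flat, falling) in enumerate(zip(flat_cost, falling_cost)):
    print(f"year {year}: flat cost {flat:.1%}, falling cost {falling:.1%}")
```

In this toy setup, the business with flat costs loses most of its gross margin within three years, while the one whose costs fall a little faster than prices sees margin drift upward. The direction of the cost curve matters more than the starting point.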

Control is the real asset

From the outside, TPUs look like a technical preference. From the inside, they are about control.

By building its own silicon, Alphabet controls its cost curve, its hardware roadmap, and its deployment cadence. It is not exposed to supplier pricing power in the same way a pure renter is. That does not mean TPUs are cheaper in every scenario. It means the company decides when and how cost improvements show up.

This distinction matters more than people realize. Companies that rent their entire AI stack inherit someone else’s incentives. When demand spikes, they pay more. When supply tightens, they wait. When pricing changes, margins move whether they want them to or not.

The GPU monoculture problem

The current AI stack has a monoculture risk. A single architecture dominates, a single ecosystem defines tooling, and a single supplier captures most of the value. From a momentum and economic perspective, this looks unbeatable.

From a systems perspective, it is fragile.

When everyone depends on the same supply chain, pricing power concentrates. When demand overshoots supply, margins compress downstream. When innovation slows, the entire ecosystem feels it.

TPUs exist partly as an escape hatch. Not a replacement for GPUs everywhere, but a pressure valve. They remind investors that diversification at the infrastructure layer is not about performance bragging rights. It is about resilience.

This is where understanding the role of NVIDIA becomes more nuanced. Owning the toll road is incredibly lucrative, but toll roads are cyclical assets when alternatives mature.

How TPUs could break out beyond Google’s environment

Historically, TPUs have lived almost entirely inside Google’s own walls. Unlike GPUs, which are sold broadly and show up in every major cloud and data center, TPUs have been tightly coupled to Google’s internal infrastructure and to Google Cloud. That constraint is not accidental. It reflects a deliberate strategy: TPUs were designed first to solve Google’s own cost problems, not to become a merchant chip.

What has changed is not the architecture, but the pressure on the rest of the ecosystem.

From internal cost lever to external option

Google has already crossed one important threshold by offering TPUs to third parties via Google Cloud. That move alone shifted TPUs from “internal optimization” to “commercial infrastructure,” even if still within a controlled environment.

As Google itself describes it:

“Cloud TPUs are designed to accelerate machine learning workloads and make Google’s internal ML infrastructure available to customers.”

Source: Google Cloud TPU documentation

https://docs.cloud.google.com/tpu/docs/intro-to-tpu

The more interesting question for investors is whether TPUs remain a Google-only cloud feature or evolve into something closer to a multi-tenant, multi-hyperscaler option.

Signals from hyperscaler-scale customers

In late 2024 and 2025, reports began to surface suggesting that very large AI users were actively exploring TPUs as an alternative to exclusive GPU dependence.

Reuters reported that Google was working with Meta to reduce Nvidia’s software advantage by improving TPU support for widely used frameworks:

“Google is working with Meta Platforms to improve support for AI software on its in-house Tensor Processing Units, a move aimed at reducing Nvidia’s dominance in AI chips.”

Source: Reuters

https://www.reuters.com/business/google-works-erode-nvidias-software-advantage-with-metas-help-2025-12-17/

That article matters less for the Meta angle and more for what it implies: Google is investing in ecosystem compatibility, not just hardware performance. That is a prerequisite for TPUs to travel beyond Google-native workloads.

Separate industry reporting has also noted that Meta has explored large-scale TPU usage through Google Cloud as part of its broader effort to diversify away from single-vendor GPU exposure.

“Meta is reportedly considering Google’s TPU chips as part of its effort to reduce reliance on Nvidia for AI infrastructure.”

Source: AI Certs industry report

https://www.aicerts.ai/news/meta-eyes-google-tpu-chips-in-high-stakes-ai-partnership/

None of this means TPUs are about to be sold like off-the-shelf GPUs. But it does suggest that, at hyperscaler scale, the desire for architectural leverage is growing.

Software is the real gatekeeper

One of the biggest historical blockers for TPUs outside Google was tooling. NVIDIA’s CUDA became the de facto standard for AI development, and most production pipelines were built around it. TPUs, by contrast, were initially optimized for TensorFlow and Google-internal stacks.

Google has been explicit about trying to lower this barrier:

“We’re investing heavily in making TPUs easier to use with popular frameworks so customers don’t have to rewrite their models.”

Source: Google Cloud blog

https://cloud.google.com/blog/products/ai-machine-learning/an-in-depth-look-at-googles-first-tensor-processing-unit-tpu

From an investor perspective, this is the most important signal. If TPUs remain software-isolated, they stay niche. If they become genuinely first-class citizens in frameworks like PyTorch, they become an economic alternative, not just a technical one.

Why a TPU breakout would matter

If TPUs ever move beyond Google Cloud into colocated environments, joint ventures, or tightly integrated partnerships with other hyperscalers, the impact would not be about performance leadership. It would be about negotiating power.

For the AI infrastructure market, that would mean:

More leverage against single-vendor pricing power, such as NVIDIA’s

More pressure on GPU margins at the largest scale

More incentive for diversified compute strategies

This does not “kill” NVIDIA. But it does introduce a credible reminder that its largest customers are not passive. They are actively looking for ways to internalize costs and reduce dependency.

TPUs escaping Google’s silo would not be a sudden disruption. It would be a slow, structural shift, visible first in contracts and capex decisions rather than benchmarks. For investors, those are exactly the signals that tend to matter most over the long run.

How TPUs could affect NVIDIA and Nebius

Understanding TPUs is useful not because they replace GPUs everywhere, but because they change the power balance in the AI infrastructure stack. That shift has very different implications for companies like NVIDIA and Nebius.

NVIDIA: pricing power with an expiration date, not a cliff

NVIDIA’s position today is extraordinary. It controls the dominant hardware platform, the dominant software ecosystem, and most of the marginal dollars flowing into AI compute. From an investor perspective, that looks like a textbook toll booth.

TPUs do not invalidate this. In fact, in the near term they arguably reinforce it. The existence of TPUs signals just how expensive and strategically important AI compute has become. That validates NVIDIA’s margins rather than undermining them.

Over the longer term, though, every large-scale TPU deployment is a reminder that NVIDIA’s biggest customers are also its most motivated future competitors. Hyperscalers do not build custom silicon because GPUs are bad products. They do it because paying a perpetual margin to an external supplier becomes painful once inference volume stabilizes and grows predictably.

For NVIDIA, this creates a subtle but important dynamic: revenue growth can remain strong while pricing power slowly caps out, and unit volumes can rise even as hyperscalers work to reduce long-term dependence.

This means the risk is not sudden displacement, but margin compression, starting with the largest, most sophisticated buyers.

From the outside, NVIDIA still looks untouchable. From the inside, TPUs represent the point where customers stop asking “how fast can we scale?” and start asking “how much control do we really have?”

That does not break the NVIDIA story, but it reframes it as a cyclical infrastructure business with unusually strong near-term leverage rather than an infinitely compounding software-like asset.

Nebius: caught between simplicity and dependence

Nebius sits on the opposite side of the control equation.

As a cloud and AI infrastructure provider without proprietary silicon, Nebius benefits from NVIDIA’s ecosystem and performance leadership, but it also inherits NVIDIA’s cost structure. Every improvement in GPU pricing or efficiency helps Nebius. Every supply constraint or pricing shift flows directly into its margins.

TPUs matter here not because Nebius will deploy them tomorrow, but because they highlight the structural disadvantage of being a compute renter in a world where inference, rather than training new models, becomes the dominant AI workload.

If hyperscalers can run inference more cheaply on their own silicon, they gain room to compete more aggressively on price for large customers and absorb cost volatility internally.

Nebius, by contrast, must either pass costs through or accept margin pressure. At small scale, this is manageable. At large scale, it becomes a strategic ceiling.

From an investor perspective, this does not mean Nebius cannot succeed. It means its upside is more tightly coupled to NVIDIA’s roadmap and pricing discipline. TPUs expose that dependency clearly. Nebius is building on top of someone else’s product.

The broader takeaway for NVIDIA and Nebius investors

TPUs do not “kill” GPU companies, and they do not automatically doom GPU-based clouds. What they do is draw a clean line between infrastructure owners and infrastructure renters.

NVIDIA sits upstream, monetizing everyone’s urgency to deploy AI.

Hyperscalers with TPUs are trying to internalize that urgency into controllable costs.

Providers like Nebius live in the middle, exposed to both sides.

For investors, the mistake is treating these businesses as if they compound in the same way. TPUs make it clear that AI infrastructure rewards control first, efficiency second, and flexibility last.

Once AI becomes boring and inference becomes the bigger topic, the companies that own their cost curves tend to age better than the ones that rent them.

Closing thoughts

Once you see TPUs as an economic tool rather than a chip, several things shift.

You stop evaluating AI companies solely on model quality and start asking who controls their cost base. You become skeptical of businesses whose gross margins depend on someone else’s roadmap. You treat infrastructure ownership as a form of pricing power, even when it does not show up immediately.

Most importantly, you stop assuming that AI compounding is automatic. Technology overwrites itself constantly. Cost structures are what survive.

TPUs matter because they sit at that intersection. They are not the future of all AI compute. They are proof that, at scale, fundamentals reassert themselves.

For anyone who wants to learn more about hardware for AI, here's the post that I mentioned at the start of this article: https://techfundamentals.substack.com/p/intro-to-ai-hardware-for-investors
